Lead2Pass: Latest Free Oracle 1Z0-060 Dumps (81-90) Download!

QUESTION 81

Which four actions are possible during an online data file move operation?

A. Creating and dropping tables in the data file being moved

B. Performing file shrink of the data file being moved

C. Querying tables in the data file being moved

D. Performing Block Media Recovery for a data block in the data file being moved

E. Flashing back the database

F. Executing DML statements on objects stored in the data file being moved

Answer: ACEF

Explanation:

Incorrect:

Not B: The online move data file operation may be aborted if the standby recovery process takes the data file offline, shrinks the file, or drops the tablespace.

Not D: The online move data file operation cannot be executed on a physical standby while standby recovery is running in a mounted but not open instance.

Note:

You can move the location of an online data file from one physical file to another physical file while the database is actively accessing the file. To do so, you use the SQL statement ALTER DATABASE MOVE DATAFILE.

An operation performed with the ALTER DATABASE MOVE DATAFILE statement increases the availability of the database because it does not require that the database be shut down to move the location of an online data file. In releases prior to Oracle Database 12c Release 1 (12.1), you could only move the location of an online data file if the database was down or not open, or by first taking the file offline.

You can perform an online move data file operation independently on the primary and on the standby (either physical or logical). The standby is not affected when a data file is moved on the primary, and vice versa.
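As a minimal sketch, a maintenance script might assemble the ALTER DATABASE MOVE DATAFILE statement described above as follows. The helper names and the example paths are hypothetical. The optional REUSE keyword (overwrite an existing file at the target) and KEEP keyword (retain the original file after the move) follow the 12c syntax of this statement.

```python
def quote_literal(value: str) -> str:
    """Wrap a value in single quotes, doubling embedded quotes as SQL requires."""
    return "'" + value.replace("'", "''") + "'"


def build_move_datafile(source: str, target: str, reuse: bool = False, keep: bool = False) -> str:
    """Return an ALTER DATABASE MOVE DATAFILE statement (hypothetical helper)."""
    parts = ["ALTER DATABASE MOVE DATAFILE", quote_literal(source), "TO", quote_literal(target)]
    if reuse:
        parts.append("REUSE")  # overwrite an existing file at the target path
    if keep:
        parts.append("KEEP")  # keep the original file after the move completes
    return " ".join(parts) + ";"


if __name__ == "__main__":
    # Placeholder paths; the file stays online and accessible while it is moved.
    print(build_move_datafile("/u01/oradata/orcl/users01.dbf", "/u02/oradata/orcl/users01.dbf"))
```

The generated statement is run from SQL*Plus while the database stays open, which is what lets queries and DML against the file continue during the move.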

QUESTION 82

Your multitenant container database (CDB) contains a pluggable database, HR_PDB. The default permanent tablespace in HR_PDB is USERDATA. The CDB is open, and you connect to it with RMAN.

You want to issue the following RMAN command:

RMAN> BACKUP TABLESPACE hr_pdb:userdata;

Which task should you perform before issuing the command?

A. Place the root container in ARCHIVELOG mode.

B. Take the USERDATA tablespace offline.

C. Place the root container in the nomount stage.

D. Ensure that HR_PDB is open.

Answer: D

Explanation:

To back up tablespaces or data files:

Start RMAN and connect to a target database and a recovery catalog (if used).

If the database instance is not started, then either mount or open the database.

Run the BACKUP TABLESPACE command or BACKUP DATAFILE command at the RMAN prompt.
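Applied to this question, those steps amount to making sure HR_PDB is open (answer D) and then running the backup. The Python helper below is a hypothetical sketch that emits that RMAN command sequence. It assumes Oracle Database 12c RMAN, which accepts SQL statements such as ALTER PLUGGABLE DATABASE directly at the RMAN prompt; the function name and the `pdb_open` flag are illustrative.

```python
from typing import List


def rman_tablespace_backup(pdb: str, tablespace: str, pdb_open: bool) -> List[str]:
    """Return RMAN commands that back up one PDB tablespace (hypothetical helper).

    The PDB is opened first when it is not already open, matching answer D.
    """
    commands: List[str] = []
    if not pdb_open:
        # Assumes RMAN 12c, which runs this SQL statement without a SQL prefix.
        commands.append(f"ALTER PLUGGABLE DATABASE {pdb} OPEN;")
    # PDB-qualified tablespace syntax: <pdb_name>:<tablespace_name>
    commands.append(f"BACKUP TABLESPACE {pdb}:{tablespace};")
    return commands


if __name__ == "__main__":
    for line in rman_tablespace_backup("hr_pdb", "userdata", pdb_open=False):
        print("RMAN>", line)
```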

QUESTION 83

Identify three scenarios in which you would recommend the use of SQL Performance Analyzer to analyze impact on the performance of SQL statements.

A. Change in the Oracle Database version

B. Change in your network infrastructure

C. Change in the hardware configuration of the database server

D. Migration of database storage from non-ASM to ASM storage

E. Database and operating system upgrade

Answer: ACE

Explanation:

Oracle 11g/12c makes further use of SQL tuning sets with the SQL Performance Analyzer, which compares the performance of the statements in a tuning set before and after a database change. The database change can be as major or minor as you like, such as:

* (E) Database, operating system, or hardware upgrades.

* (A, C) Database, operating system, or hardware configuration changes.

* Database initialization parameter changes.

* Schema changes, such as adding indexes or materialized views.

* Refreshing optimizer statistics.

* Creating or changing SQL profiles.
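The core idea of SQL Performance Analyzer, comparing each statement's performance before and after a change, can be sketched in Python as below. This is not the DBMS_SQLPA API: the per-statement elapsed-time dictionaries, the SQL IDs, and the 20% regression threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Comparison:
    sql_id: str
    before_ms: float  # elapsed time measured before the change
    after_ms: float   # elapsed time measured after the change

    @property
    def change_pct(self) -> float:
        """Percentage change in elapsed time; positive means slower after the change."""
        if self.before_ms <= 0:
            raise ValueError(f"baseline elapsed time for {self.sql_id} must be positive")
        return (self.after_ms - self.before_ms) / self.before_ms * 100.0


def compare_trials(
    before: Dict[str, float], after: Dict[str, float], threshold_pct: float = 20.0
) -> Tuple[List[Comparison], List[Comparison], List[Comparison]]:
    """Classify statements measured in both trials as regressed, improved, or unchanged."""
    regressed: List[Comparison] = []
    improved: List[Comparison] = []
    unchanged: List[Comparison] = []
    for sql_id in sorted(before.keys() & after.keys()):
        c = Comparison(sql_id, before[sql_id], after[sql_id])
        if c.change_pct > threshold_pct:
            regressed.append(c)
        elif c.change_pct < -threshold_pct:
            improved.append(c)
        else:
            unchanged.append(c)
    return regressed, improved, unchanged


if __name__ == "__main__":
    before = {"a1": 100.0, "b2": 50.0, "c3": 80.0}  # hypothetical trial before the change
    after = {"a1": 150.0, "b2": 30.0, "c3": 82.0}   # hypothetical trial after the change
    regressed, improved, unchanged = compare_trials(before, after)
    print("regressed:", [c.sql_id for c in regressed])  # ['a1'] (+50%)
    print("improved:", [c.sql_id for c in improved])    # ['b2'] (-40%)
    print("unchanged:", [c.sql_id for c in unchanged])  # ['c3'] (+2.5%)
```

A change to network infrastructure (option B) or to the storage layer alone (option D) would show up in such a comparison only indirectly, which is why the explanation ties SPA to database, OS, and hardware changes.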

QUESTION 84

Which two statements are true about the RMAN VALIDATE DATABASE command?

A. It checks the database for intrablock corruptions.

B. It can detect corrupt pfiles.

C. It can detect corrupt spfiles.

D. It checks the database for interblock corruptions.

E. It can detect corrupt block change tracking files.

Answer: AD

Explanation:

Oracle Database supports different techniques for detecting, repairing, and monitoring block corruption. The technique depends on whether the corruption is interblock corruption or intrablock corruption. In intrablock corruption, the corruption occurs within the block itself. This corruption can be either physical or logical. In an interblock corruption, the corruption occurs between blocks and can only be logical.

Note:

* The main purpose of RMAN validation is to check for corrupt blocks and missing files. You can also use RMAN to determine whether backups can be restored. You can use the following RMAN commands to perform validation:

VALIDATE

BACKUP … VALIDATE

RESTORE … VALIDATE
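To make the intrablock/interblock distinction concrete, the following Python toy model (not how RMAN is implemented) treats a checksum mismatch inside a single block as intrablock corruption, and a pointer from one block to a missing block or to a block owned by a different object as interblock (logical) corruption. The `Block` structure, its fields, and the use of CRC-32 are assumptions made for illustration.

```python
import zlib
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Block:
    block_id: int
    object_id: int                    # object (for example, a table) that owns this block
    payload: bytes
    checksum: int                     # checksum stored when the block was written
    next_block: Optional[int] = None  # pointer to another block of the same object


def make_block(block_id: int, object_id: int, payload: bytes, next_block: Optional[int] = None) -> Block:
    """Create a block whose stored checksum matches its payload."""
    return Block(block_id, object_id, payload, zlib.crc32(payload), next_block)


def intrablock_corrupt(blocks: Dict[int, Block]) -> List[int]:
    """IDs of blocks whose own contents no longer match their stored checksum."""
    return sorted(b.block_id for b in blocks.values() if zlib.crc32(b.payload) != b.checksum)


def interblock_corrupt(blocks: Dict[int, Block]) -> List[int]:
    """IDs of blocks whose pointer references a missing block or another object's block."""
    bad = []
    for b in blocks.values():
        if b.next_block is None:
            continue
        target = blocks.get(b.next_block)
        if target is None or target.object_id != b.object_id:
            bad.append(b.block_id)
    return sorted(bad)


if __name__ == "__main__":
    blocks = {
        1: make_block(1, 100, b"rows", next_block=2),
        2: make_block(2, 100, b"more rows"),
        3: make_block(3, 200, b"data"),
        4: make_block(4, 200, b"x", next_block=1),  # points into object 100: logical damage
    }
    blocks[3].payload = b"dat?"  # simulate damage inside block 3 after it was written
    print("intrablock:", intrablock_corrupt(blocks))  # [3]
    print("interblock:", interblock_corrupt(blocks))  # [4]
```

Block 4 passes its own checksum yet is still inconsistent with the rest of the data, which is why interblock corruption can only be logical.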

QUESTION 85

You install a non-RAC Oracle Database. During installation, the Oracle Universal Installer (OUI) prompts you to enter the path of the Inventory directory and to specify an operating system group name.

Which statement is true?

A. The ORACLE_BASE parameter is not set.

B. The installation is being performed by the root user.

C. The operating system group that is specified should have the root user as its member.

D. The operating system group that is specified must have permission to write to the inventory directory.

Answer: D

Explanation:

Note:

Providing a UNIX Group Name

If you are installing a product on a UNIX system, the Installer will also prompt you to provide the name of the group which should own the base directory.

You must choose a UNIX group name which will have permissions to update, install, and deinstall Oracle software. Members of this group must have write permissions to the base directory chosen.

Only users who belong to this group are able to install or deinstall software on this machine.
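Answer D hinges on the specified group being able to write to the inventory directory. A minimal POSIX-only Python sketch of that check follows. The function name is hypothetical, and it inspects only the directory's owning group and group permission bits; ACLs and root privileges are ignored.

```python
import os
import stat
import tempfile


def group_can_write(path: str, gid: int) -> bool:
    """Return True if `path` is owned by group `gid` with group write and execute bits set.

    On a directory, write plus execute lets group members create and remove entries.
    Only POSIX permission bits are inspected; ACLs and root privileges are ignored.
    """
    st = os.stat(path)
    needed = stat.S_IWGRP | stat.S_IXGRP
    return st.st_gid == gid and (st.st_mode & needed) == needed


if __name__ == "__main__":
    # Demonstration on a scratch directory standing in for the inventory directory.
    inventory = tempfile.mkdtemp()
    gid = os.stat(inventory).st_gid
    os.chmod(inventory, 0o770)
    print(group_can_write(inventory, gid))  # True
    os.chmod(inventory, 0o750)
    print(group_can_write(inventory, gid))  # False
    os.rmdir(inventory)
```

On a real host you would pass the inventory path and the numeric ID of the installation group, for example `grp.getgrnam("oinstall").gr_gid`, where `oinstall` is the conventional (assumed) group name.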

QUESTION 86

You are required to migrate your 11.2.0.3 database to a multitenant container database (CDB) as a pluggable database (PDB).

The following are the possible steps to accomplish this task:

1. Place all the user-defined tablespaces in read-only mode on the source database.

2. Upgrade the source database to a 12c version.

3. Create a new PDB in the target container database.

4. Perform a full transportable export on the source database with the VERSION parameter set to 12 using the expdp utility.

5. Copy the associated data files and the export dump file to the desired location on the target database.

6. Invoke the Data Pump import utility on the new PDB as a user with the DATAPUMP_IMP_FULL_DATABASE role and specify the full transportable import options.

7. Synchronize the PDB on the target container database by using the DBMS_PDB.SYNC_PDB procedure.

Identify the correct order of the required steps.

A. 2, 1, 3, 4, 5, 6

B. 1, 3, 4, 5, 6, 7

C. 1, 4, 3, 5, 6, 7

D. 2, 1, 3, 4, 5, 6, 7

E. 1, 5, 6, 4, 3, 2

Answer: A

Explanation:

Step 0: (2) Upgrade the source database to 12c version.

Note:

Full Transportable Export/Import Support for Pluggable Databases

Full transportable export/import was designed with pluggable databases as a migration destination.

You can use full transportable export/import to migrate from a non-CDB database into a PDB, from one PDB to another PDB, or from a PDB to a non-CDB. Pluggable databases act exactly like non-CDBs when importing and exporting both data and metadata.

The steps for migrating from a non-CDB into a pluggable database are as follows:

Step 1. (1) Set the user and application tablespaces in the source database to READ ONLY.

Step 2. (3) Create a new PDB in the destination CDB using the CREATE PLUGGABLE DATABASE command.

Step 3. (5) Copy the tablespace data files to the destination.

Step 4. (6) Using an account that has the DATAPUMP_IMP_FULL_DATABASE role, either export from the source database using expdp with the FULL=Y TRANSPORTABLE=ALWAYS options (4) and import into the target database using impdp, or import over a database link from the source to the target using impdp.

Step 5. Perform post-migration validation or testing according to your normal practice.
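The ordering logic behind answer A can be expressed as a dependency check. The Python sketch below encodes the prerequisites implied by the explanation (upgrade first, tablespaces read-only before the transportable export, the export before copying the files, and both the new PDB and the copied files before the import) and tests each option against them. The dependency table is an interpretation of this answer key, not an Oracle-documented rule.

```python
from typing import Dict, List, Set

# Hypothetical encoding of the prerequisites implied by answer A.
REQUIRED_STEPS: Set[int] = {1, 2, 3, 4, 5, 6}  # step 7 is not needed for this migration
PREREQUISITES: Dict[int, Set[int]] = {
    1: {2},     # set tablespaces read-only after upgrading (per this answer key)
    4: {1},     # transportable export needs read-only tablespaces
    5: {4},     # copy data files and dump file once the export has produced the dump
    6: {3, 5},  # import needs the new PDB and the copied files
}


def is_valid_order(order: List[int]) -> bool:
    """True if `order` has exactly the required steps, each after all of its prerequisites."""
    if len(order) != len(set(order)) or set(order) != REQUIRED_STEPS:
        return False
    position = {step: i for i, step in enumerate(order)}
    return all(
        position[pre] < position[step]
        for step, pres in PREREQUISITES.items()
        for pre in pres
    )


OPTIONS: Dict[str, List[int]] = {
    "A": [2, 1, 3, 4, 5, 6],
    "B": [1, 3, 4, 5, 6, 7],
    "C": [1, 4, 3, 5, 6, 7],
    "D": [2, 1, 3, 4, 5, 6, 7],
    "E": [1, 5, 6, 4, 3, 2],
}

if __name__ == "__main__":
    for label, order in OPTIONS.items():
        print(label, is_valid_order(order))  # only A prints True
```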

QUESTION 87

Your multitenant container database (CDB) contains two pluggable databases (PDBs). You want to create a new PDB by using SQL Developer.

Which statement is true?

A. The CDB must be open.

B. The CDB must be in the mount stage.

C. The CDB must be in the nomount stage.

D. All existing PDBs must be closed.

Answer: A

Explanation:

* Creating a PDB

Rather than constructing the data dictionary tables that define an empty PDB from scratch, and then populating its Obj$ and Dependency$ tables, the empty PDB is created when the CDB is created. (Here, we use empty to mean containing no customer-created artifacts.) It is referred to as the seed PDB and has the name PDB$Seed. Every CDB non-negotiably contains a seed PDB; it is non-negotiably always open in read-only mode. This has no conceptual significance; rather, it is just an optimization device. The create PDB operation is implemented as a special case of the clone PDB operation. The size of the seed PDB is only about 1 gigabyte, and it takes only a few seconds on a typical machine to copy it.
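Because creating a PDB is implemented as a clone of the seed PDB, the underlying SQL is a CREATE PLUGGABLE DATABASE statement whose FILE_NAME_CONVERT clause maps the seed's file directory to the new PDB's directory, and it runs against an open CDB (answer A). The Python helper below is a hypothetical sketch that assembles such a statement; the PDB name, admin user, password, and paths are placeholders, and it assumes file names are mapped explicitly with FILE_NAME_CONVERT rather than by Oracle Managed Files.

```python
def quote_literal(value: str) -> str:
    """Single-quote a SQL string literal, doubling embedded quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_create_pdb(pdb_name: str, admin_user: str, admin_password: str,
                     seed_dir: str, pdb_dir: str) -> str:
    """Return a CREATE PLUGGABLE DATABASE statement that clones the seed (hypothetical helper).

    FILE_NAME_CONVERT rewrites the seed's file paths to the new PDB's directory.
    The password is emitted as a double-quoted value, so it must not contain double quotes.
    """
    if '"' in admin_password:
        raise ValueError("password must not contain double quotes")
    return (
        f"CREATE PLUGGABLE DATABASE {pdb_name} "
        f'ADMIN USER {admin_user} IDENTIFIED BY "{admin_password}" '
        f"FILE_NAME_CONVERT = ({quote_literal(seed_dir)}, {quote_literal(pdb_dir)});"
    )


if __name__ == "__main__":
    print(build_create_pdb("pdb3", "pdb3admin", "secret",
                           "/u01/oradata/cdb1/pdbseed/", "/u01/oradata/cdb1/pdb3/"))
```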

QUESTION 88

Which two statements are true about Oracle Direct Network File System (dNFS)?

A. It utilizes the OS file system cache.

B. A traditional NFS mount is not required when using Direct NFS.

C. Oracle Disk Manager can manage NFS on its own, without using the operating system kernel NFS driver.

D. Direct NFS is available only in UNIX platforms.

E. Direct NFS can load-balance I/O traffic across multiple network adapters.

Answer: CE

Explanation:

E: Performance is improved by load balancing across multiple network interfaces (if available).

Note:

* To enable Direct NFS Client, you must replace the standard Oracle Disk Manager (ODM) library with one that supports Direct NFS Client.

Incorrect:

Not A: Direct NFS Client is capable of performing concurrent direct I/O, which bypasses any operating system level caches and eliminates any operating system write-ordering locks.

Not B: To use Direct NFS Client, the NFS file systems must first be mounted and available over regular NFS mounts.

Not D: Direct NFS is provided as part of the database kernel, and is thus available on all supported database platforms, even those that don’t support NFS natively, like Windows.

Note:

* Oracle Direct NFS (dNFS) is an optimized NFS (Network File System) client that provides faster and more scalable access to NFS storage located on NAS storage devices (accessible over TCP/IP). Direct NFS is built directly into the database kernel, just like ASM, which is mainly used with DAS or SAN storage.

* Oracle Direct NFS (dNFS) is an internal I/O layer that provides faster access to large NFS files than traditional NFS clients.
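Answer E says Direct NFS can spread I/O across several network paths. The Python sketch below models that idea with simple round-robin assignment of I/O requests to the paths configured for one NFS server. The path addresses are documentation-range placeholders, and round-robin is an assumed policy chosen for illustration, not a description of dNFS internals.

```python
from collections import Counter
from itertools import cycle
from typing import Iterable, List, Tuple


def assign_round_robin(requests: Iterable[str], paths: List[str]) -> List[Tuple[str, str]]:
    """Pair each I/O request with the next network path in turn."""
    if not paths:
        raise ValueError("at least one network path is required")
    path_cycle = cycle(paths)
    return [(req, next(path_cycle)) for req in requests]


if __name__ == "__main__":
    paths = ["192.0.2.1", "198.51.100.1"]  # placeholder addresses for two network adapters
    io = [f"read block {n}" for n in range(6)]
    plan = assign_round_robin(io, paths)
    print(Counter(path for _, path in plan))  # each path carries 3 requests
```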

QUESTION 89

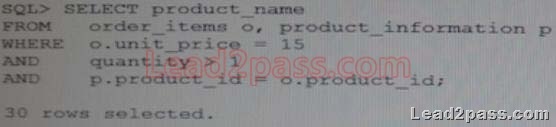

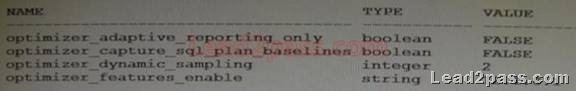

Examine the parameters for your database instance:

Which three statements are true about the process of automatic optimization by using cardinality feedback?

A. The optimizer automatically changes a plan during subsequent execution of a SQL statement if there is a huge difference in optimizer estimates and execution statistics.

B. The optimizer can reoptimize a query only once using cardinality feedback.

C. The optimizer enables monitoring for cardinality feedback after the first execution of a query.

D. The optimizer does not monitor cardinality feedback if dynamic sampling and multicolumn statistics are enabled.

E. After the optimizer identifies a query as a reoptimization candidate, statistics collected by the collectors are submitted to the optimizer.

Answer: ACD

Explanation:

C: During the first execution of a SQL statement, an execution plan is generated as usual.

D: If multicolumn statistics are not present for the relevant combination of columns, the optimizer can fall back on cardinality feedback.

Not B: Cardinality feedback, enabled by default in 11.2, is intended to improve plans for repeated executions.

optimizer_dynamic_sampling

optimizer_features_enable

* Dynamic sampling or multicolumn statistics allow the optimizer to estimate the selectivity of conjunctive predicates more accurately.

Note:

* OPTIMIZER_DYNAMIC_SAMPLING controls the level of dynamic sampling performed by the optimizer.

Range of values: 0 to 10

* Cardinality feedback was introduced in Oracle Database 11gR2. The purpose of this feature is to automatically improve plans for queries that are executed repeatedly, for which the optimizer does not estimate cardinalities in the plan properly. The optimizer may misestimate cardinalities for a variety of reasons, such as missing or inaccurate statistics, or complex predicates. Whatever the reason for the misestimate, cardinality feedback may be able to help.
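The mechanism described above, comparing the optimizer's cardinality estimates with the row counts actually seen during execution and marking the statement for reoptimization when they diverge widely, can be sketched in Python. The plan operations, the row counts, and the 10x mismatch threshold are hypothetical; the threshold is an assumption, not Oracle's internal value.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlanStep:
    operation: str
    estimated_rows: int  # optimizer estimate at parse time
    actual_rows: int     # rows observed during execution


def misestimate_ratio(step: PlanStep) -> float:
    """Ratio of the larger to the smaller cardinality; 1.0 means a perfect estimate."""
    est = max(step.estimated_rows, 1)  # avoid division by zero
    act = max(step.actual_rows, 1)
    return max(est, act) / min(est, act)


def needs_reoptimization(steps: List[PlanStep], threshold: float = 10.0) -> bool:
    """Flag the statement if any step's estimate is off by at least `threshold` times."""
    return any(misestimate_ratio(s) >= threshold for s in steps)


if __name__ == "__main__":
    first_run = [
        PlanStep("TABLE ACCESS FULL EMP", estimated_rows=10, actual_rows=5000),
        PlanStep("INDEX RANGE SCAN DEPT_IX", estimated_rows=4, actual_rows=4),
    ]
    # True: EMP was estimated at 10 rows but returned 5000, so this model
    # would flag the next execution for reoptimization.
    print(needs_reoptimization(first_run))
```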

QUESTION 90

Which three statements are true when the listener handles connection requests to an Oracle 12c database instance with multithreaded architecture enabled in UNIX?

A. Thread creation must be routed through a dispatcher process

B. The local listener may spawn a new process and have that new process create a thread.

C. Each Oracle process runs an SCMN thread.

D. Each multithreaded Oracle process has an SCMN thread.

E. The local listener may pass the request to an existing process which in turn will create a thread.

Answer: ADE
